Short version:

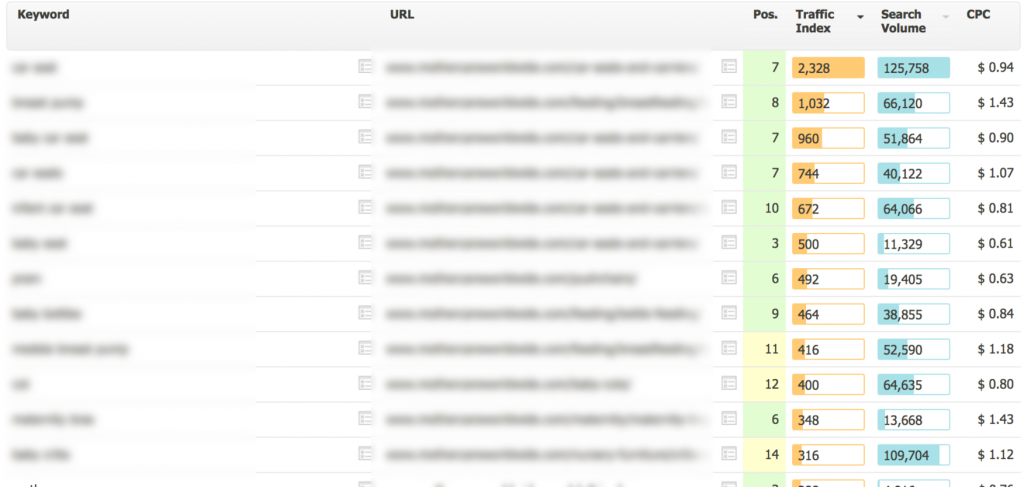

For the $12 cost of a domain, I was able to rank in Google search results against Amazon, Walmart, etc. for high-value money terms in the US. The AdWords bid price for some of these terms is currently around $1 per click, and companies are spending tens of thousands of dollars a month to appear as ads on these search results. I was appearing for free.

Google have now fixed the issue and awarded a bug bounty of $5000.

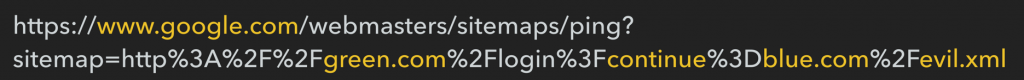

Google provides an open URL where you can ‘ping’ an XML sitemap which they will fetch and parse – this file can contain indexation directives. I discovered that for many sites it is possible to ping a sitemap that you (the attacker) are hosting in such a way that Google will trust the evil sitemap as belonging to the victim site.

I believe this is the first time they have awarded a bounty for a security issue in the actual search engine, which directly affects the ranking of sites.

As part of my regular research efforts, I recently discovered an issue with Google that allows an attacker to submit an XML sitemap to Google for a site for which they are not authenticated. Because these files can contain indexation directives, such as hreflang, an attacker can use these directives to help their own sites rank in the Google search results.

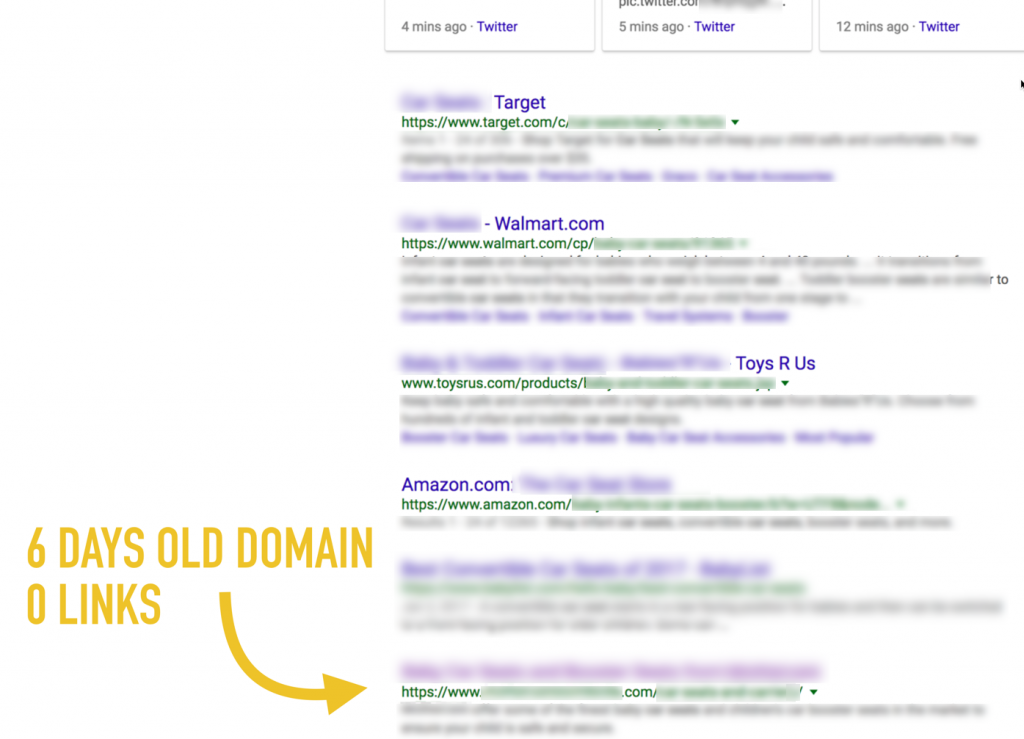

I spent $12 setting up my experiment and was ranking on the first page for highly monetizable search terms, with a newly registered domain that had no inbound links.

XML Sitemap & Ping Mechanism

Google allows for the submission of XML sitemaps. These help them discover URLs to crawl, but can also contain hreflang directives, which Google uses to understand what other international versions of the same page may exist (i.e. “hey Google, this is the US page, but I have a German page on this URL…”). It is not known exactly how Google uses these directives (as with anything related to Google’s search algorithms), but it appears that hreflang allows one URL to ‘borrow’ the link equity and trust of another URL and use it to rank (i.e. most people link to the US .com version, and so the German version can ‘borrow’ that equity to rank better in Google.de).
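Here is a minimal Python sketch of what such a sitemap looks like. It uses the `xhtml:link rel="alternate" hreflang="…"` markup that Google documents for declaring localised alternates in sitemaps. The example.com URLs and the helper name `build_hreflang_sitemap` are illustrative placeholders and do not come from the original experiment.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
XHTML_NS = "http://www.w3.org/1999/xhtml"

# Serialise with the sitemap namespace as the default and the conventional
# "xhtml:" prefix for the alternate-link elements.
ET.register_namespace("", SITEMAP_NS)
ET.register_namespace("xhtml", XHTML_NS)


def build_hreflang_sitemap(pages: dict[str, dict[str, str]]) -> str:
    """Return sitemap XML in which each <url> lists its language/region alternates.

    `pages` maps a page URL to {hreflang code: alternate URL}; by convention
    each page also lists itself among its own alternates.
    """
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for loc, alternates in pages.items():
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = loc
        for lang, href in alternates.items():
            ET.SubElement(url, f"{{{XHTML_NS}}}link",
                          {"rel": "alternate", "hreflang": lang, "href": href})
    return ET.tostring(urlset, encoding="unicode")


if __name__ == "__main__":
    # "This is the US page, but I have a German page on this URL…"
    print(build_hreflang_sitemap({
        "https://www.example.com/page": {
            "en-us": "https://www.example.com/page",
            "de-de": "https://www.example.com/de/page",
        },
    }))
```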

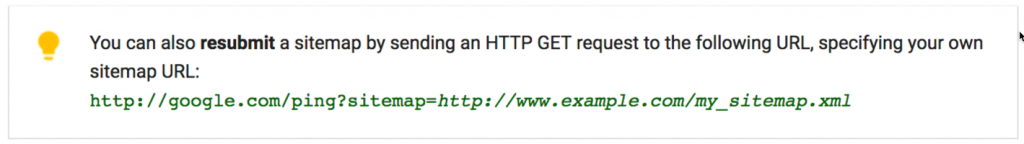

You can submit XML sitemaps for your domain either via Google Search Console, inside your robots.txt or via a special ‘ping’ URL. Google’s own docs seem a bit conflicting; at the top of the page they refer to submitting sitemaps via the ping mechanism, but at the bottom of the page they have this warning:

However, in my experience you could absolutely submit new XML sitemaps via the ping mechanism, with Googlebot typically fetching the file within 10-15 seconds of the ping. Importantly, Google also mention a couple of times on the page that if you submit a sitemap via the ping mechanism it will not show up inside your Search Console:

As a related test, I checked whether I could add other known search directives (noindex, rel-canonical) via XML sitemaps (as well as trying a bunch of XML exploits), but Google didn’t seem to use them.
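For illustration, the ping itself was just a plain HTTP GET to a Google URL that carried the sitemap location as a query parameter. The sketch below only builds that URL. The endpoint shown is the one Google documented at the time, and Google has since deprecated it. `build_ping_url` is a hypothetical helper name. The sitemap URL is percent-encoded in full so that any query string it carries stays inside the `sitemap` parameter.

```python
from urllib.parse import quote

# Ping endpoint as documented by Google at the time (since deprecated).
PING_ENDPOINT = "https://www.google.com/ping"


def build_ping_url(sitemap_url: str) -> str:
    """Return the URL whose GET asks Google to fetch `sitemap_url`.

    safe="" encodes every reserved character, including "/", "?", "&" and "=",
    so the sitemap URL's own query string cannot leak into the ping's.
    """
    return f"{PING_ENDPOINT}?sitemap={quote(sitemap_url, safe='')}"


print(build_ping_url("https://www.example.com/sitemap.xml"))
# → https://www.google.com/ping?sitemap=https%3A%2F%2Fwww.example.com%2Fsitemap.xml
```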

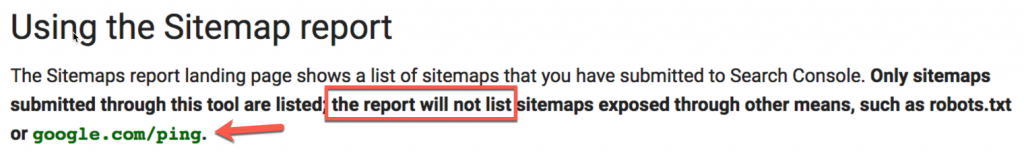

Google Search Console Submission

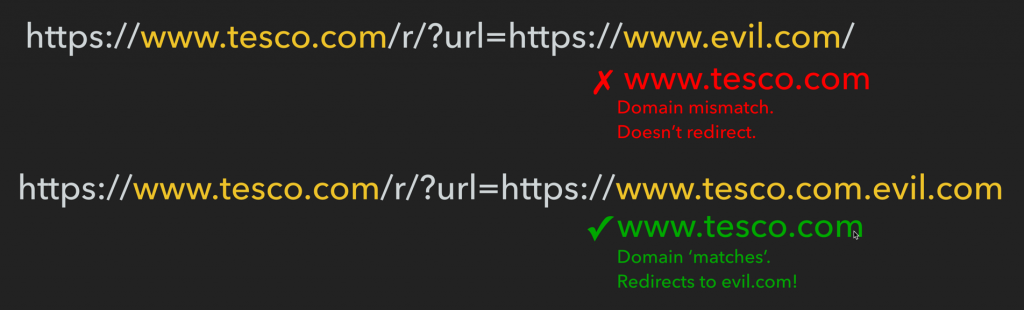

If you try to submit an XML sitemap in GSC that includes URLs for another domain you are not authorised for, GSC rejects them:

We’ll come back to this in a moment.
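As a rough illustration of the ownership rule GSC applies here, the hypothetical check below flags any sitemap `<loc>` whose host differs from the verified property. It is a simplified sketch that uses an exact hostname match and ignores how Search Console actually defines a property. It is not Google’s actual logic.

```python
from urllib.parse import urlsplit


def rejected_urls(verified_host: str, sitemap_locs: list[str]) -> list[str]:
    """Return the sitemap <loc> URLs that fall outside the verified host.

    Hypothetical simplification: exact hostname match only (urlsplit lowercases
    the hostname), ignoring subdomains, protocol variants and other details.
    """
    allowed = verified_host.lower()
    return [loc for loc in sitemap_locs
            if (urlsplit(loc).hostname or "") != allowed]


# A sitemap submitted in evil.com's Search Console that lists a victim.com page:
print(rejected_urls("evil.com", ["https://evil.com/a", "https://victim.com/b"]))
# → ['https://victim.com/b']
```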

(Sorry, Jono!)

Open Redirects

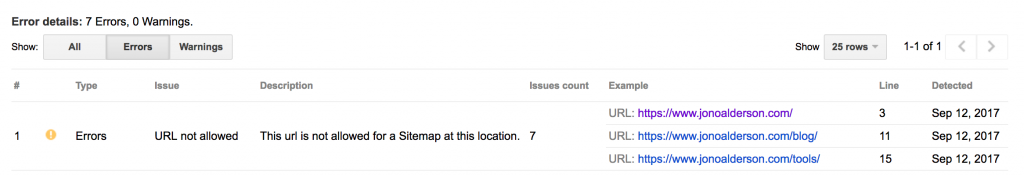

Many sites use a URL parameter to control a redirect:

In this example I would be redirected (after login) to page.html. Some sites with poor hygiene allow for what are known as ‘open redirects’, where these parameters allow redirecting to a different domain:


Often these don’t need any interaction (like a login), so they just redirect the user right away:
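The original redirect examples were screenshots that have not survived here. The sketch below shows the vulnerable pattern in Python, using hypothetical URLs and a hypothetical `next` parameter. The handler resolves whatever the parameter says and redirects there, without checking which domain it points to.

```python
from urllib.parse import parse_qs, urljoin, urlsplit


def redirect_target(request_url: str, param: str = "next") -> str:
    """Where a naive redirect endpoint would send the user.

    The parameter is resolved against the current URL but never validated,
    so an absolute URL on another domain is followed just like a local path.
    """
    query = parse_qs(urlsplit(request_url).query)
    target = query.get(param, ["/"])[0]
    return urljoin(request_url, target)


print(redirect_target("https://www.example.com/login?next=/page.html"))
# → https://www.example.com/page.html
print(redirect_target("https://www.example.com/go?next=https://evil.com/"))
# → https://evil.com/
```

The login step in the first example is not modelled. The sketch only shows where the user ends up once the redirect fires.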


Open redirects are very common and often considered not too dangerous; Google does not include them in their bug bounty program for this reason. Companies do try to protect against them where possible, but their protection can often be circumvented:

Tesco are a UK retailer with more than £50 billion in revenue, over £1 billion of which comes via their website. I reported this example to Tesco (along with a number of others I discovered at other companies during this research) and they have since fixed it.
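The Tesco bypass itself is not reproduced here. As a generic sketch with hypothetical host and helper names, a prefix-style check like `naive_is_safe` is commonly bypassed with protocol-relative URLs, look-alike hostnames or userinfo tricks. `strict_is_safe` instead resolves the target and compares the parsed hostname against an allow-listed host, and it refuses characters that browsers silently normalise. Treat it as an illustration rather than an exhaustive defence.

```python
from urllib.parse import urljoin, urlsplit

ALLOWED_HOST = "www.example.com"  # hypothetical site doing the redirecting


def naive_is_safe(target: str) -> bool:
    """Flawed check: accepts anything that *looks* local or starts with our URL."""
    return target.startswith("/") or target.startswith(f"https://{ALLOWED_HOST}")


def strict_is_safe(target: str) -> bool:
    """Resolve the target as a relative URL and compare the parsed hostname."""
    # Browsers treat "\" like "/" and drop tabs/newlines, so refuse such input
    # outright instead of trying to normalise it the same way they do.
    if "\\" in target or any(ord(ch) < 0x21 or ord(ch) == 0x7F for ch in target):
        return False
    resolved = urlsplit(urljoin(f"https://{ALLOWED_HOST}/", target))
    return resolved.scheme in ("http", "https") and resolved.hostname == ALLOWED_HOST


BYPASSES = [
    "//evil.com/",                        # protocol-relative: starts with "/"
    "https://www.example.com.evil.com/",  # look-alike host sharing our prefix
    "https://www.example.com@evil.com/",  # our host is only the userinfo part
]
for t in BYPASSES:
    print(f"{t:38} naive={naive_is_safe(t)} strict={strict_is_safe(t)}")
```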

Ping Sitemaps via Open Redirects 😱

At this point, you may have guessed where I’m going with this. It turns out that when you ping an XML sitemap, if the URL you submit is a redirect, Google will follow that redirect, even if it is cross-domain. Importantly, Google still seems to associate the XML sitemap with the domain that did the redirect, and treats the sitemap it finds after the redirect as authorised for that domain. For example:

In this case, the evil.xml sitemap is hosted on blue.com, but Google treats it as belonging to, and being authoritative for, green.com. Using this, you can submit XML sitemaps for sites you shouldn’t have control of, and send Google search directives on their behalf.
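The redirect-following behaviour can be modelled without any network access. In this sketch, the `REDIRECTS` table stands in for real HTTP responses, and the `/out?url=` open-redirect path on green.com is hypothetical. With `follow_cross_domain=True`, it mimics what the post observed: the sitemap fetched from blue.com is credited to green.com, the host that was pinged. With `False`, it models the fix suggested to Google in the disclosure timeline, which is to refuse pinged sitemaps whose redirect chain leaves the original host.

```python
from typing import Optional
from urllib.parse import urlsplit

# Hypothetical stand-in for real HTTP responses: URL -> Location it redirects to.
REDIRECTS = {
    "https://green.com/out?url=https%3A%2F%2Fblue.com%2Fevil.xml":
        "https://blue.com/evil.xml",
}


def fetch_chain(url: str, max_hops: int = 5) -> list[str]:
    """Follow redirects from `url` and return every URL visited, in order."""
    chain = [url]
    while chain[-1] in REDIRECTS:
        if len(chain) > max_hops:
            raise RuntimeError("too many redirects")
        chain.append(REDIRECTS[chain[-1]])
    return chain


def credited_host(pinged_url: str, follow_cross_domain: bool = True) -> Optional[str]:
    """Host that a pinged sitemap is treated as authoritative for.

    follow_cross_domain=True mimics the behaviour observed in the post: the
    file fetched at the end of the chain is credited to the host that was
    pinged. False models the suggested fix and returns None whenever the
    chain leaves the original host.
    """
    chain = fetch_chain(pinged_url)
    origin = urlsplit(chain[0]).hostname
    if not follow_cross_domain and any(urlsplit(u).hostname != origin for u in chain):
        return None
    return origin


ping = "https://green.com/out?url=https%3A%2F%2Fblue.com%2Fevil.xml"
print(fetch_chain(ping)[-1])                           # → https://blue.com/evil.xml
print(credited_host(ping))                             # → green.com
print(credited_host(ping, follow_cross_domain=False))  # → None
```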

Experiment: Using hreflang directive to ‘steal’ equity and rank for free

At this point I had the various moving parts, but I hadn’t confirmed that Google would really trust a cross-domain redirected XML sitemap, so I spun up an experiment to test it. I had done lots of smaller tests to understand various parts of this (as well as various dead ends), but didn’t expect this experiment to work as well as it did.

I created a fake domain for a UK-based retail company that doesn’t operate in the USA, and spun up an AWS server that mimicked the site (primarily by harvesting the legitimate content and retooling it, i.e. changing currency, address, etc.). I have anonymised the company (and industry) here to protect them, so let’s just call them victim.com.

I then created a fake sitemap that was hosted on evil.com but contained only URLs for victim.com. Each of these URLs had an hreflang entry pointing to an equivalent URL on evil.com, indicating it was the US version of victim.com. I then submitted this sitemap via Google’s ping mechanism, using an open redirect URL on victim.com.
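The experiment nests one URL inside another, so the encoding is easy to get wrong. This sketch uses hypothetical hosts, paths and a hypothetical `next` parameter, and the post does not say exactly how the original URLs were encoded. It shows the two pieces. First, each victim.com URL is paired with an `en-us` alternate on evil.com. Second, the ping URL is built by percent-encoding the sitemap URL into the open redirect, then encoding the whole redirect URL into the ping’s `sitemap` parameter. It reflects behaviour that Google has since fixed.

```python
from urllib.parse import quote, urlsplit, urlunsplit

# All hosts, paths and the "next" parameter are hypothetical placeholders.
VICTIM_REDIRECT = "https://victim.com/redirect?next="  # open redirect on victim.com
EVIL_SITEMAP = "https://evil.com/sitemap.xml"           # sitemap hosted by the attacker
PING = "https://www.google.com/ping?sitemap="


def mirror_url(victim_url: str, evil_host: str = "evil.com") -> str:
    """Same scheme, path and query as `victim_url`, but on the attacker's host."""
    p = urlsplit(victim_url)
    return urlunsplit((p.scheme, evil_host, p.path, p.query, p.fragment))


def hreflang_entries(victim_urls: list[str]) -> list[tuple[str, str, str]]:
    """(loc, hreflang, href) triples: each victim.com page claims an en-us copy on evil.com."""
    return [(url, "en-us", mirror_url(url)) for url in victim_urls]


def pinged_url() -> str:
    # Encode innermost first: the sitemap URL goes into the redirect's parameter,
    # then the whole redirect URL goes into the ping's `sitemap` parameter.
    via_redirect = VICTIM_REDIRECT + quote(EVIL_SITEMAP, safe="")
    return PING + quote(via_redirect, safe="")


print(hreflang_entries(["https://victim.com/products/widget"]))
print(pinged_url())
```

The triples would then be written out as `<url>` entries with `xhtml:link` alternates, and each layer of the nested URL is decoded once by the server that consumes it.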

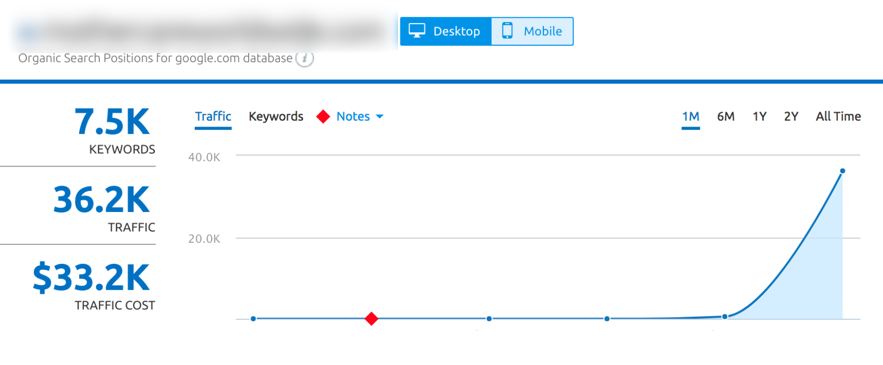

Within 48 hours the site started getting small amounts of traffic for long-tail terms (SEMRush screenshot):

A couple more days passed and I started appearing for competitive terms on the 1st page, against the likes of Amazon & Walmart:

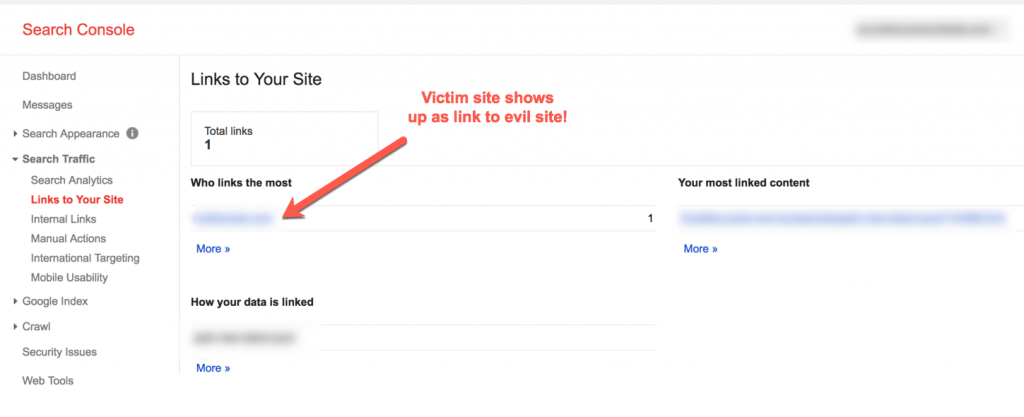

Furthermore, Google Search Console for evil.com indicated that victim.com was linking to evil.com, although this obviously was not the case:

At this point I found I was also able to submit XML sitemaps for victim.com inside GSC for evil.com:

It seemed that Google had linked the sites, and evil.com’s search console now had some capabilities to influence victim.com’s setup. I could now also track indexation for my submitted sitemaps (you can see I had thousands of pages indexed now).

Searchmetrics was showing the increasing value of the traffic:

Google Search Console was showing over a million search impressions and over 10,000 clicks from Google search; and at this point I had done nothing other than submit the XML sitemap!

You should note that I was not letting people check out on the evil site, but had I wanted to, at this point I could have scammed people out of a lot of money, set up ads, or otherwise begun monetising this traffic. In my mind this posed a serious risk to Google’s users, as well as to companies relying on Google search for traffic. Traffic was still growing, but I shut my experiment down and abandoned my follow-up experiments for fear of doing damage.

Discussion

This method is entirely undetectable for victim.com: the XML sitemaps don’t show up on their end, and if you do what I did and leverage their link equity in a different country, you could fly entirely under the radar. Competitors in the country you are operating in would be left quite baffled by the performance of your site (see above, where I’m in the search results alongside Amazon, Walmart & Target, who are all spending significant resources to be there).

In terms of Black Hat SEO, this had a clear use. Furthermore, it is the first example I know of of an outright exploit in the algorithm, as opposed to manipulation of ranking factors. The potential financial impact of the issue seems non-trivial: imagine the potential profit from targeting Tesco or similar (I had more tests planned to investigate this further, but couldn’t run them without potentially causing damage).

Google have awarded a $5000 bounty for this, and the Google team were a pleasure to deal with, as always. Thanks to them.

If you have any questions, comments or information, you can contact me by email, on Twitter at @TomAnthonySEO, or via Distilled.

Disclosure Timeline

- 23rd September 2017 – I filed the initial bug report.

- 25th September 2017 – Google responded – they had triaged the bug and were looking into it.

- 2nd October 2017 – I sent some more details.

- 9th October – 6th November 2017 – some back-and-forth status updates.

- 6th November 2017 – Google said “This report has been somewhat hard to determine on what can be done to prevent this kind of behavior and the amount of it’s impact on our search results.I have reached out to team to get a final decision on your report. I know they have been sifting through the data to determine how prevalent the behavior that you described is and whether this is anything immediately that should be done about it.”

- 6th November 2017 – I replied suggesting they stop following cross-domain redirects for pinged sitemaps, as there is little good reason for it, and it could be made a GSC-only feature.

- 3rd January 2018 – I asked for a status update.

- 15th January 2018 – Google replied “Apologies for the delay, I didn’t want to close this report earlier, because we were unable to get a definitive decision, if it would be possible to address this behavior with the redirect chain without breaking many legitimate use cases. I have reached back out to the team reviewing this report to get a final answer and will update you with their response this week.”

- 15th February 2018 – Google updated to let me know a bug had been filed on the report, and the VRP board would discuss a bounty.

- 6th March 2018 – Google let me know they had awarded a bounty of $1337.

- 6th March 2018 – I shared a draft of this post with Google and asked for green light to disclose.

- 12th March 2018 – Google let me know they hadn’t completed the fix, and asked me to hold off.

- 25th March 2018 – Google confirmed the fix was live, and gave me green light to post.

- 17th April 2018 – Google contacted me again to say they had upgraded the bounty amount to $5000. 🙂

84 responses to “Google exploit via XML Sitemaps to manipulate search results”

Really interesting post – thanks for sharing Tom! Surprising that it took so long for Google to fix (and that you had to follow up with them!).

Awesome! Great Share bro!

WTF! Surprising that Google took 6 months to get it fixed. They should offer more money :p . BTW Wonderful Post!

Probably they’ve forgotten about 3-4 zeros at the end… I don’t get their stinginess. He would earn more by using it for 1 day…

Exactly, these companies are getting really cheap with their bounties. And they wonder why there are more and more people trying to exploit this kind of thing.

With these lowball values, maybe next time he will sell this exploit for the 6 months while they decide whether to fix it or not

Btw, good job

Very interesting, the way hreflang operates

$1337

The guy in charge knows about the dark-side spirit and code 🙂

Man, you’re missing sharing buttons. I like to share original sources, because this is a really cool experiment! Thanks a lot!

Hello

thanks Tom for sharing !

you have to wait a lot, for google to fix it !!

Great post and experiment!

I would have been tempted to keep this to myself if I were you. Kudos to you!

I hope you made a few hundred k out of it before the bug got fixed 😉

great job.! tech geek:)

Hello,

Amazing post 🙂

Black Hat still remains a vast world!

Now that Google has fixed the bug, should website owners fix their URLs too (as Tesco did)?

Wow, great read and methodology. Got to say the bug bounty seems on the low side. Thanks to Rand for tweeting about this!

Jeez, how did you even think to try that??? Amazing find. Nice work!

Dude, this is pretty neat discovery and a good story!

This was super interesting and insightful, thanks for writing it Tom. Google was a little slow to this, if you were a blackhat you could have made a killing, they def should have given you more bounty 😉 …

You’re an honest idiot.

Awesome find and what an interesting read. A little adventure, and very intriguing. Glad that it fell into your hands and not others’.

Good find and article. Makes you wonder how they came up with the $1,337 number.

1337= l e e t = e l i t e

What’s great about this is that you choose to report it to Google, not to use it as a kind of blackhat seo! Thanks for the post.